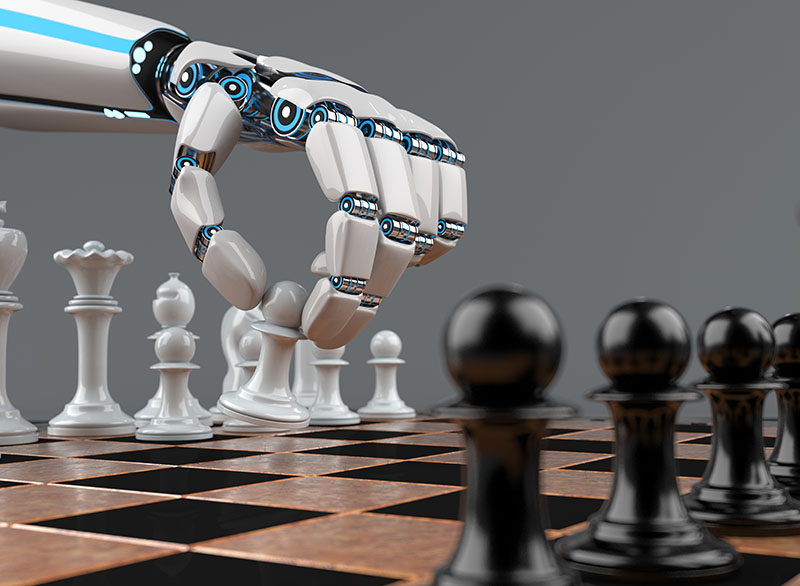

Artificial Intelligence has become the biggest buzzword in legal technology since, well, the last biggest buzzword: ECA, TAR, Blockchain, Analytics, Big Data, Collaboration, Disruption, Innovation.

Every 6 months we have a new “big thing” and right now it’s AI.

Articles are constantly harping on the rise of the machines that AI portends. Gartner includes AI on their list of the top 10 strategic technology trends of 2019 and even estimates that 80% of emerging technologies will be built on a foundation of artificial intelligence by 2021. In a Law Technology Today article, Andrew Ng, Co-Founder of Coursera and Adjunct Professor of Computer Science at Stanford University, says AI is the new electricity. “Just as electricity transformed almost everything 100 years ago,” he explains, “today I actually have a hard time thinking of an industry that I don’t think AI will transform in the next several years.”

And in that same article, Ajith Samuel, technologist and co-founder of eDiscovery company Exterro, says that:

1. Using legal artificial intelligence will become “frictionless,” meaning that it will be ever more seamlessly integrated into the e-discovery process.

2. Legal AI will move out of the review phase, earlier in the EDRM, helping legal teams get to the facts of the matter faster, cheaper, and smarter than ever before.

3. AI in law will play an increasing role in orchestrating the e-discovery process, streamlining the process and improving efficiency.

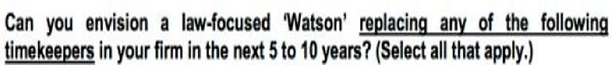

And let’s not forget the 2015 survey by Altman Weil of 320 firms with at least 50 lawyers on staff, which found that 35 percent of the leaders at those firms (responding anonymously) believed some form of AI would replace first-year associates in the coming decade. An additional 20 percent believed that second- and third-year attorneys could also be replaced by technology over the same period, while fully half the respondents said that paralegals could be totally eliminated by computers. (See graphic below.)

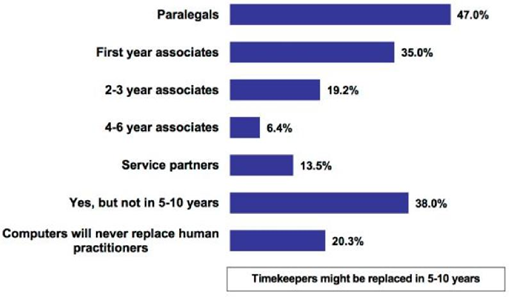

But if we are to believe the latest ILTA survey, that simply isn’t happening. The annual survey of the International Legal Technology Association was released on Nov 5 and this year’s survey reports answers from 537 firms, representing more than 116,000 attorneys and 240,000 total users.

With regard to AI, it finds that just over 50% of respondents are not “...presently pursuing any AI option” and only 25% are actively researching an AI option. Respondents with active pilot projects or study groups accounted for 7% and 4% respectively, with only 10% reporting an active AI tool deployment. See graphic below.

So what is going on here? We hear lots of talk about AI but see not much actual usage. Part of the problem, I believe, is definitional: actual definitions of AI are in short supply.

One recent article broke out AI into 6 categories.

- Due diligence – Litigators perform due diligence with the help of AI tools to uncover background information. We’ve decided to include contract review, legal research and electronic discovery in this section.

- Prediction technology – An AI software generates results that forecast litigation outcome.

- Legal analytics – Lawyers can use data points from past case law, win/loss rates and a judge’s history to be used for trends and patterns.

- Document automation – Law firms use software templates to create filled out documents based on data input.

- Intellectual property – AI tools guide lawyers in analyzing large IP portfolios and drawing insights from the content.

- Electronic billing – Lawyers’ billable hours are computed automatically.

And an actual standard (or standards) for AI has been slow to develop, with the first just recently published by the Organization for Economic Co-operation and Development (OECD), which adopted and published its “Principles on AI” on the Law and AI blog.

But in all that discussion, where are the AI use propositions for eDiscovery? Well, the problem there is that eDiscovery vendors are traditionally close-mouthed about their systems. And since a primary feature of legal artificial intelligence, as mentioned by Ajith Samuel above, is its “frictionless” adoption, AI implementation is hidden by both design and practice. Legal technology has become more Fight Club than computer lab, and AI in law has become the worst example of that proposition.

I’ve written before that all this emphasis on new technology brings to mind my old friend, the late Browning Marean, and his love for the writings of technologist and futurist Ray Kurzweil. In his book, The Singularity Is Near: When Humans Transcend Biology, Kurzweil proposed that upcoming advances in technology would transform people irreversibly through genetic alterations, nanotechnology, and artificial intelligence.

I, on the other hand, have always felt more strongly about another aspect of the Singularity: the proposition that massive, rapid changes in technology will outstrip people’s ability to absorb and comprehend them. As I have said for years, people are slow and computers are fast, but people are smart and computers are dumb.

I am not alone in that belief. Stephen Hawking wrote an op-ed in The Independent in 2014 in which he said, “Success in creating AI would be the biggest event in human history. Unfortunately, it might also be the last, unless we learn how to avoid the risks.” Hawking went on to elaborate, during a separate interview with the BBC, that “humans, limited by slow biological evolution, couldn’t compete and would be superseded by A.I.”

Bill Gates has expressed similar concerns and, during a Q&A session on Reddit in January 2015, said, “I am in the camp that is concerned about super intelligence. First, the machines will do a lot of jobs for us and not be super intelligent. That should be positive if we manage it well. A few decades after that though the intelligence is strong enough to be a concern. I agree with Elon Musk and some others on this and don’t understand why some people are not more concerned.”

Is this concern valid? Looking at it from the perspective of the legal community, is there an ethical dilemma created by delegating legal responsibilities to robots? If we don’t truly understand how technology works, especially AI, are we abrogating our ethical duties to manage our clients’ matters?

The ABA considered that very point as far back as 2013 in an article for Your ABA Enews called Duty to Supervise Nonlawyers: Ignorance is Not Bliss. Authors Peter Geraghty (Director of the ETHICSearch, ABA Center for Professional Responsibility) and Susan J. Michmerhuizen (ETHICSearch Research Counsel) focused on issues with paralegals and support staff, but I believe that technical experts and computers fall into the same category.

This issue arises most often when technical vendors run computer searches of documents and then produce documents directly to the other party. The potential unsupervised release of privileged material can be catastrophic and, indeed, prompted Geraghty and Michmerhuizen to note this excerpt from Comment [3] to Rule 5.3, which states:

... Nonlawyers Outside the Firm

[3] A lawyer may use nonlawyers outside the firm to assist the lawyer in rendering legal services to the client. Examples include the retention of an investigative or paraprofessional service, hiring a document management company to create and maintain a database for complex litigation, sending client documents to a third party for printing or scanning, and using an Internet-based service to store client information. When using such services outside the firm, a lawyer must make reasonable efforts to ensure that the services are provided in a manner that is compatible with the lawyer’s professional obligations.

So when it comes to artificial intelligence and the law, do you really understand how the program you are using works, and are you really actively supervising in a knowledgeable manner? Attorneys can no longer delegate to others (staff, clients or vendors) the responsibility of understanding technology. In today’s landscape of a specific duty of technical competence, the “Fight Club” approach to AI will not stand up to scrutiny.

Technology is a tool and the responsibility for using the proper tool in the proper manner resides ultimately with the attorney. As I have said before, we need to keep the attorney in AI.

It’s not enough to be aware of AI; we have to understand AI. Or, as the great technologist Elvis Aaron Presley once said, “A little less conversation, a little more action please.”
